Apple Intelligence caused chaos with a false murder report

According to a BBC report, Apple’s newly released AI platform, Apple Intelligence, faced backlash after incorrectly announcing the death of Luigi Mangione, a murder suspect. The notification, sent via iPhone last week, summarized a BBC report incorrectly, leading to criticism from both users and media organizations. This incident raises significant questions about the reliability and accuracy of AI-generated information.

Apple’s AI tool misreports murder suspect’s death notification

Apple has integrated Apple Intelligence into its operating systems, including iOS 18 and macOS Sequoia. Among its features, the platform offers generative AI tools designed for writing and image creation. Additionally, it provides a function to categorize and summarize notifications, aimed at reducing user distractions throughout the day. However, the recent false notification highlights potential inaccuracies and shortcomings of this new feature, leaving users perplexed and concerned about the integrity of information being shared.

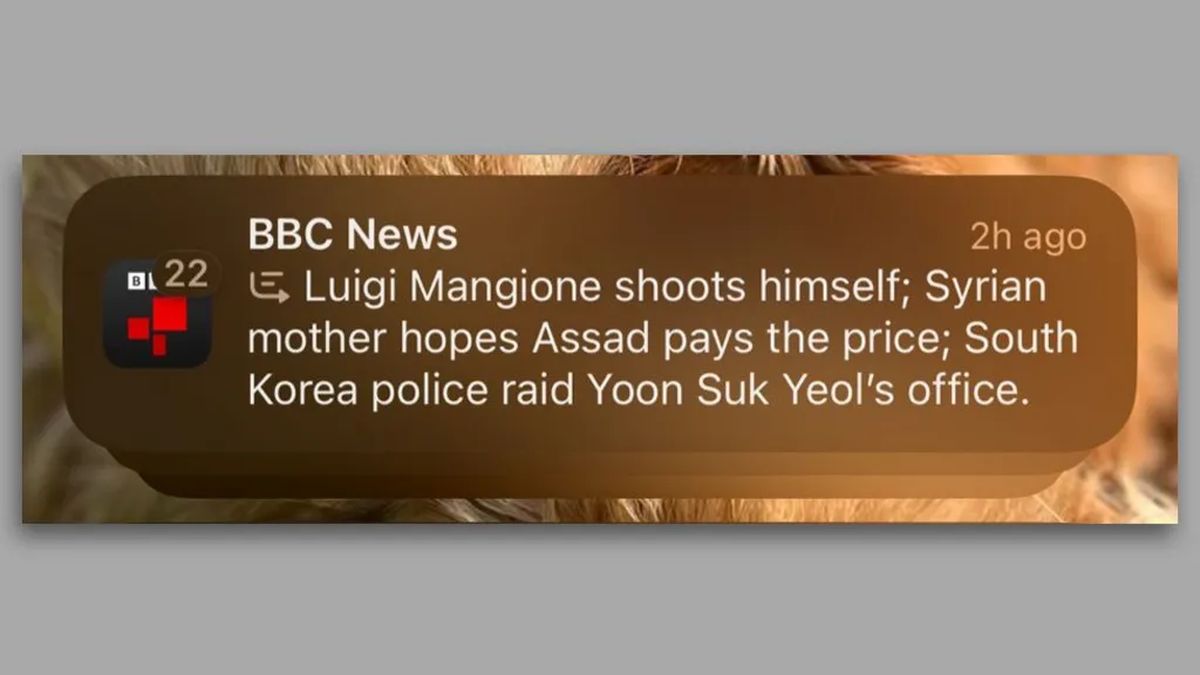

On December 13, 2024, iPhone users received a notification stating, “Luigi Mangione shoots himself,” alongside two other breaking news summaries. The erroneous summary quickly drew attention because it misreported a crucial detail about Mangione, who is accused of killing UnitedHealthcare CEO Brian Thompson on December 4. The BBC, which had not published any report of Mangione shooting himself, lodged a complaint with Apple. The network has since called for Apple to reconsider its generative AI tool.

The underlying issue appears to stem from the large language models (LLMs) used by Apple Intelligence. Speaking to The Street, Komninos Chatzipapas, Director at Orion AI Solutions, said, “LLMs like GPT-4o… don’t really have any inherent understanding of what’s true and what’s not.” These models statistically predict the next words based on vast datasets, yet this method can produce reliable-sounding content that misrepresents facts. In this case, Chatzipapas speculated that Apple may have inadvertently trained its summarization model on similar examples in which individuals shot themselves, yielding the misleading headline.

Image: BBC

The implications of this incident extend beyond Apple’s internal practices. Reporters Without Borders has urged the company to remove the summarization feature, stressing the severity of disseminating inaccurate information tied to reputable media outlets. Vincent Berthier from Reporters Without Borders articulated concerns about AI-generated misinformation damaging the credibility of news sources. He stated, “A.I.s are probability machines, and facts can’t be decided by a roll of the dice.” This reinforces the argument that AI models currently lack the maturity necessary to ensure reliable news dissemination.

This incident is not isolated. Since Apple Intelligence launched in the U.S. in October, users have reported further inaccuracies, including a notification that falsely stated Israeli Prime Minister Benjamin Netanyahu had been arrested. Although the International Criminal Court issued an arrest warrant for Netanyahu, the notification omitted that crucial context, displaying only the phrase “Netanyahu arrested.”

The controversy surrounding Apple Intelligence reveals challenges related to the autonomy of news publishers in the age of AI. While some media organizations actively employ AI tools for writing and reporting, users of Apple’s feature receive summaries that may misrepresent facts, all while appearing under the publisher’s name. This practice could have broader implications for how information is reported and perceived in the digital age.

Apple has not yet responded to inquiries regarding its review process or any steps it intends to take in relation to the BBC’s concerns. The current landscape of AI technology, shaped significantly by the introduction of platforms like ChatGPT, has spurred rapid innovation, yet it also creates fertile ground for inaccuracies that can mislead the public. As scrutiny of AI-generated content and its implications continues, ensuring reliability and accuracy grows more pressing.

Featured image credit: Maxim Hopman/Unsplash