ByteDance VAPO: The AI upgrade you’ll hear about soon

ByteDance Seed researchers have introduced Value Augmented Proximal Policy Optimization (VAPO), a reinforcement learning training framework designed to sharpen large language models’ reasoning on long, complex tasks. The team reports a new state-of-the-art result on the AIME24 benchmark.

Training LLMs for intricate reasoning with value-based reinforcement learning has faced three significant hurdles: bias in the value model, response sequences of widely varying lengths, and sparse reward signals, especially in verifier-based tasks that provide only binary feedback.

VAPO addresses these challenges through three core innovations: a detailed value-based training framework, a Length-adaptive Generalized Advantage Estimation (GAE) mechanism adjusting parameters based on response length, and the systematic integration of techniques from prior research.
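To make the length-adaptive idea concrete, here is a minimal Python sketch. It assumes a schedule of the form λ = 1 − 1/(α·l), where l is the response length in tokens and α is a hyperparameter. That form, the α value, and all function names are assumptions made for illustration and are not stated in this article. The GAE recursion itself is the standard one.

```python
import numpy as np

def length_adaptive_lambda(length, alpha=0.05):
    """Assumed schedule: lambda moves toward 1 as the response gets longer."""
    # Clamp at 0 so very short responses do not produce a negative lambda.
    return max(0.0, 1.0 - 1.0 / (alpha * length))

def gae(rewards, values, gamma=1.0, lam=0.95):
    """Standard GAE over one response; `values` holds one estimate per token."""
    rewards = np.asarray(rewards, dtype=float)
    values = np.asarray(values, dtype=float)
    advantages = np.zeros(len(rewards))
    next_value, running = 0.0, 0.0  # nothing follows the final token
    for t in reversed(range(len(rewards))):
        # One-step TD error, then the exponentially weighted sum of later errors.
        delta = rewards[t] + gamma * next_value - values[t]
        running = delta + gamma * lam * running
        advantages[t] = running
        next_value = values[t]
    return advantages

def length_adaptive_gae(rewards, values, alpha=0.05, gamma=1.0):
    """GAE whose lambda is chosen from the response length (hypothetical helper)."""
    lam = length_adaptive_lambda(len(rewards), alpha)
    return gae(rewards, values, gamma=gamma, lam=lam)
```

Under this assumed schedule, a short response gets a small λ and leans more on the value model, while a long response gets a λ close to 1, so a single binary reward at the final token still reaches the early tokens without being discounted away.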

The modifications are designed to reinforce one another. Using the Qwen2.5-32B model without specific supervised fine-tuning (SFT) data, VAPO raised the AIME24 score from about 5 with vanilla PPO to about 60, surpassing the previous state-of-the-art method by roughly 10 points.

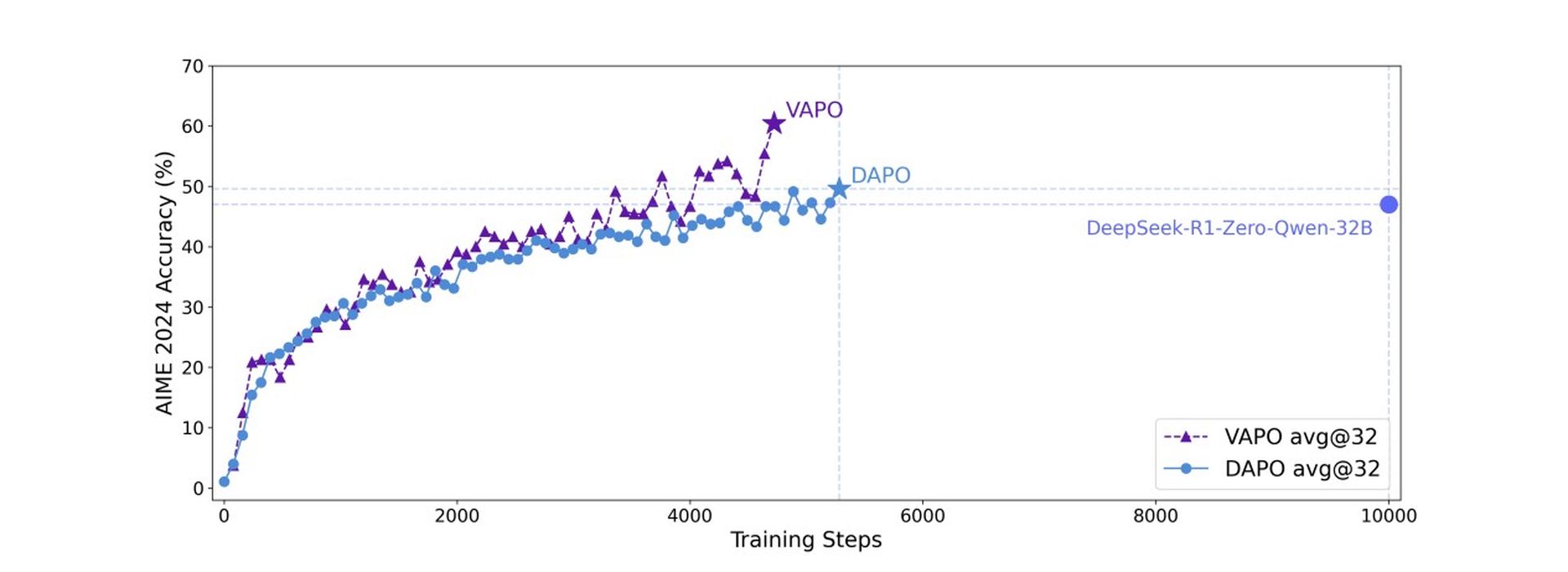

VAPO builds upon the Proximal Policy Optimization (PPO) algorithm but incorporates key modifications to enhance mathematical reasoning. Training analysis revealed VAPO exhibits smoother training curves compared to the value-free DAPO method, indicating more stable optimization.

VAPO also demonstrated better length scaling for improved generalization, faster score growth attributable to the granular signals from its value model, and lower entropy in later training stages. While reduced entropy can potentially limit exploration, the method effectively balances this, improving reproducibility and stability with minimal performance impact.

Image: ByteDance Seed

On the AIME24 benchmark, DeepSeek R1 using GRPO achieved 47 points, and DAPO reached 50 points. VAPO, using the Qwen2.5-32B model, matched DAPO’s performance with only 60% of the update steps and set a new state-of-the-art score of 60.4 within 5,000 steps. In contrast, vanilla PPO scored just 5 points because its value model collapsed during learning.

Ablation studies confirmed the effectiveness of seven distinct modifications within VAPO. Value-Pretraining prevents model collapse; decoupled GAE enables full optimization of long responses; adaptive GAE balances short and long response optimization; Clip-higher encourages thorough exploration; Token-level loss increases weighting for long responses; incorporating positive-example LM loss added 6 points; and Group-Sampling contributed 5 points to the final score.
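Two of these modifications, Clip-higher and Token-level loss, are easy to illustrate in a few lines of Python. The sketch below is an assumption-laden illustration rather than the authors' implementation: the clip bounds `eps_low` and `eps_high` are illustrative values, and the function names are hypothetical. It shows a PPO-style clipped objective with a wider upper bound, and compares averaging over all tokens in a batch with averaging per response first.

```python
import numpy as np

def clipped_surrogate(ratio, adv, eps_low=0.2, eps_high=0.28):
    """PPO-style clipped objective with an asymmetric range (assumed values).

    A larger eps_high lets the probability of a token rise further before
    the objective is clipped, which leaves more room for exploration.
    """
    ratio = np.asarray(ratio, dtype=float)
    adv = np.asarray(adv, dtype=float)
    clipped = np.clip(ratio, 1.0 - eps_low, 1.0 + eps_high)
    return np.minimum(ratio * adv, clipped * adv)

def token_level_loss(per_token_obj, mask):
    """Average over every real token in the batch: long responses weigh more."""
    return -(per_token_obj * mask).sum() / mask.sum()

def sample_level_loss(per_token_obj, mask):
    """Average within each response first: every response weighs the same."""
    per_seq = (per_token_obj * mask).sum(axis=1) / mask.sum(axis=1)
    return -per_seq.mean()

# Toy batch: one 1-token response and one 4-token response (0 marks padding).
obj = np.array([[2.0, 0.0, 0.0, 0.0],
                [1.0, 1.0, 1.0, 1.0]])
mask = np.array([[1.0, 0.0, 0.0, 0.0],
                 [1.0, 1.0, 1.0, 1.0]])
print(token_level_loss(obj, mask), sample_level_loss(obj, mask))
```

In the toy batch, the long response contributes four of the five tokens, so it dominates the token-level average, whereas the sample-level average gives the two responses equal weight.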

The researchers argue that VAPO, built on the Qwen2.5-32B model, shows that a value-based approach can decisively outperform value-free methods such as GRPO and DAPO. In their view, it establishes a new performance level for complex reasoning tasks and addresses fundamental challenges in training value models for long chain-of-thought scenarios.