Llama 3: Everything you need to know about Meta’s latest LLM

Meta has made its new Llama 3 LLM open-source, and you can run it locally with Ollama. By opening up Llama 3, Meta has unlocked a wealth of potential for businesses of all sizes. The model weights and accompanying code are freely available for any interested party to examine, adapt, and use. The implications for the business community, especially small and medium-sized enterprises (SMEs), are substantial.

Under the Meta Llama 3 Community License, SMEs can use Llama 3 in their own projects without encountering burdensome restrictions. As long as they comply with the license terms and applicable law, SMEs are free to explore the extensive potential of this technology.

What is Llama 3?

Llama 3 is an LLM developed by Meta. The company says it is “the most capable openly available LLM to date.”

Llama 3 comes in two sizes: 8 billion and 70 billion parameters. Like other LLMs, it is trained on a massive amount of text data and can be used for a variety of tasks, including generating text, translating languages, writing different kinds of creative content, and answering questions informatively. Meta touts Llama 3 as one of the best open models available, though development is still ongoing.

“With Llama 3, we set out to build the best open models that are on par with the best proprietary models available today,” Meta’s blog post reads.

Much of Llama 3's improvement comes from scaling up its training data. The models were trained on more than 15 trillion tokens using two newly unveiled, custom-built 24K GPU clusters. The training dataset is seven times larger than the one used for Llama 2 and includes four times more code, which significantly broadens the models' capabilities and knowledge.

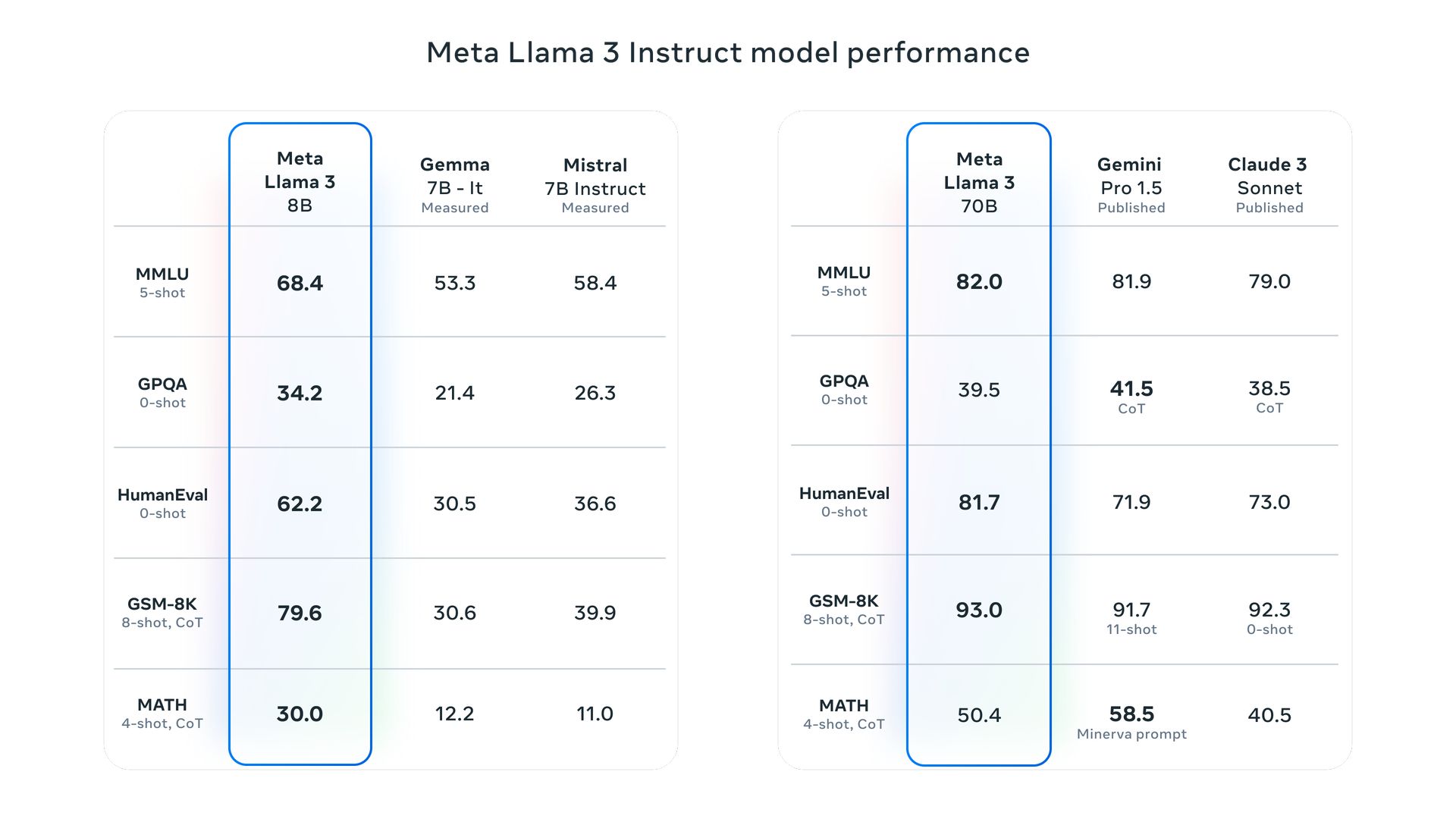

Llama 3 comes in two sizes: 8 billion and 70 billion parameters (Image credit: Meta)

We have previously discussed the Llama 3 benchmarks, which show the model delivering exceptional performance across all evaluated tasks.

How does Meta Llama 3 improve over previous versions?

The Meta Llama 3 models benefit from significant improvements to the pretraining and fine-tuning processes. These changes help the models follow user instructions more closely, generate more diverse responses, and make fewer errors. Key technical enhancements include a more efficient tokenizer and Grouped Query Attention (GQA), both of which improve inference efficiency.
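To make the inference benefit of GQA more concrete, here is a rough back-of-the-envelope sketch. With GQA, several query heads share one key/value head, so the key/value cache kept in memory during generation shrinks accordingly. The layer count, head counts, head dimension, sequence length, and 16-bit values below are illustrative assumptions for an 8B-class model, not figures taken from this article.

```python
def kv_head_for_query(query_head, n_query_heads, n_kv_heads):
    """Index of the shared key/value head that a given query head reads from."""
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size


def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # Factor 2: each layer stores one tensor of keys and one of values.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


# Assumed model shape (hypothetical values, chosen for illustration only).
N_LAYERS, N_QUERY_HEADS, N_KV_HEADS, HEAD_DIM = 32, 32, 8, 128
SEQ_LEN = 8192

# Standard multi-head attention: every query head has its own key/value head.
mha = kv_cache_bytes(N_LAYERS, N_QUERY_HEADS, HEAD_DIM, SEQ_LEN)
# Grouped query attention: groups of query heads share a key/value head.
gqa = kv_cache_bytes(N_LAYERS, N_KV_HEADS, HEAD_DIM, SEQ_LEN)

print(f"Multi-head cache:    {mha / 2**30:.2f} GiB")
print(f"Grouped-query cache: {gqa / 2**30:.2f} GiB")
print(f"Reduction:           {mha // gqa}x")
print("Query head 5 reads KV head", kv_head_for_query(5, N_QUERY_HEADS, N_KV_HEADS))
```

Under these assumptions the cache is four times smaller with GQA, which leaves more memory for longer prompts or larger batches on the same hardware.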

Is Llama 3 multimodal?

Not yet. The Llama 3 models released so far work with text, and Meta has said it aims to add multimodal capabilities, including stronger image processing. A successor, Llama 4, is reportedly planned for the end of 2024 and is expected to excel at interpreting and generating intricate images based on textual descriptions.

This advancement will enable sophisticated image modifications, enhancements in scene depiction, and the creation of realistic images across various styles, merging the capabilities of language comprehension and visual perception for more impressive outputs.

How to access Llama 3?

You can currently run Llama 3 locally through Ollama, a tool that simplifies deploying and operating large language models.

What does Ollama do?

Ollama is a tool for running open large language models, such as Llama 2 and Llama 3, on local systems. It bundles model weights, configuration, and data into a single package defined by a Modelfile. It also simplifies setup, including configuring GPU usage for better performance.
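As a rough illustration of what a Modelfile contains, the sketch below generates one from Python. The `FROM`, `PARAMETER`, and `SYSTEM` instructions are part of Ollama's Modelfile format; the helper name `build_modelfile`, the system prompt, and the temperature value are hypothetical choices made for this example.

```python
def build_modelfile(base_model, system_prompt, temperature=0.7):
    """Return Modelfile text that customizes a base model (illustrative helper)."""
    lines = [
        f"FROM {base_model}",                      # base model to build on
        f"PARAMETER temperature {temperature}",    # sampling temperature
        f'SYSTEM """{system_prompt}"""',           # default system message
    ]
    return "\n".join(lines) + "\n"


modelfile_text = build_modelfile(
    "llama3",
    "You are a concise assistant for a small business help desk.",
)
print(modelfile_text)
```

Saving this output to a file named `Modelfile` and running `ollama create helpdesk -f Modelfile` should register a customized model, where `helpdesk` is just a placeholder name.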

Is Ollama available on Windows?

Yes. Ollama is now available on Windows as a preview release. It lets users run large language models natively on Windows, with GPU acceleration, full access to the model library, and an Ollama API that includes OpenAI compatibility.
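OpenAI compatibility means that a request shaped like an OpenAI chat completion can be sent to the local Ollama server instead. The following is a minimal sketch using only the Python standard library. It assumes Ollama is running on its default local address (`http://localhost:11434`) and that a model tagged `llama3` has already been downloaded; the function names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_BASE_URL = "http://localhost:11434"


def build_chat_request(prompt, model="llama3"):
    """Build an OpenAI-style chat completion request aimed at the local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{OLLAMA_BASE_URL}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="llama3"):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, model)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, `ask("Summarize Llama 3 in one sentence.")` should return the model's reply as a string. Existing OpenAI client code can often be pointed at the same base URL with few other changes.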

Meta has declared that Llama 3 is open source, promoting broader accessibility and innovation within the tech community (Image credit)

How does Ollama work?

Ollama itself is a runtime rather than a model. The models it runs, including Llama 3, use the transformer architecture, a type of deep learning model that is central to large language models. Having been trained on extensive text data, these models capture the nuances of language. That lets them understand the context of queries, produce syntactically correct and contextually meaningful responses, and translate between languages while preserving the meaning of the source text.

Will Llama 3 be open source?

Yes. Meta has declared Llama 3 open source, promoting broader accessibility and innovation within the tech community.

Can I run Llama 3 locally?

Yes. Ollama can run a range of large language models, including Llama 3, on personal computers. It builds on llama.cpp, an open-source library that makes it possible to run LLMs locally even on systems with modest hardware. Ollama also works like a package manager for models: a single command downloads and runs an LLM, which makes getting started faster and easier. You can learn how to run Llama 3 locally with Ollama by visiting our special guide!
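The single command in question is `ollama run llama3`, which downloads the model on first use and then runs it. As a small sketch, the same command can be driven from a Python script. This assumes the `ollama` CLI is installed and on your `PATH`; the helper names are hypothetical.

```python
import shutil
import subprocess


def ollama_run_command(prompt, model="llama3"):
    """Argument list equivalent to typing: ollama run <model> "<prompt>"."""
    return ["ollama", "run", model, prompt]


def run_locally(prompt, model="llama3"):
    """Run one prompt through a local model and return the printed reply."""
    if shutil.which("ollama") is None:
        raise RuntimeError("The ollama CLI was not found on PATH")
    result = subprocess.run(
        ollama_run_command(prompt, model),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if the command fails
    )
    return result.stdout.strip()
```

Calling `run_locally("Why is the sky blue?")` may take a while the first time, since the model has to be downloaded before it can answer.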

What safety features are integrated into Meta Llama 3?

Meta has introduced several trust and safety tools alongside Llama 3, such as Llama Guard 2 and CyberSec Eval 2, to address security and safety concerns. These tools help filter problematic outputs and support safe deployment. The models have also been red-teamed to test and refine their responses to adversarial inputs.

What are the future goals for Meta Llama 3?

Meta plans to extend Llama 3 to support multilingual and multimodal capabilities, handle longer context lengths, and further improve performance across the core capabilities of language models. It also aims to keep the models open to community involvement and iterative improvement.

Featured image credit: Meta