Microsoft now confirms you can opt out of, and remove, Windows Recall

Microsoft has released a white paper of sorts outlining what the company is doing to secure user data within Windows Recall, the controversial Windows feature that takes snapshots of your activity for later searching.

As of late last night, Microsoft still hasn’t said whether it will release Recall to the Windows Insider channels for further testing as originally planned. In fact, Microsoft’s paper says very little about Recall as a product or when the company will push it live to the public.

Recall was first announced back in May as part of the Windows 11 24H2 update, and it uses the local AI capabilities of Copilot+ PCs. The idea is that Recall captures periodic snapshots of your screen, then uses optical character recognition plus AI-driven techniques to interpret your activity. If you need to revisit something from earlier but don’t remember what it was or where it was stored, Recall steps in.

However, Recall was seen as a privacy risk and was subsequently withdrawn from its intended public launch, with Microsoft saying that Recall would return in October.

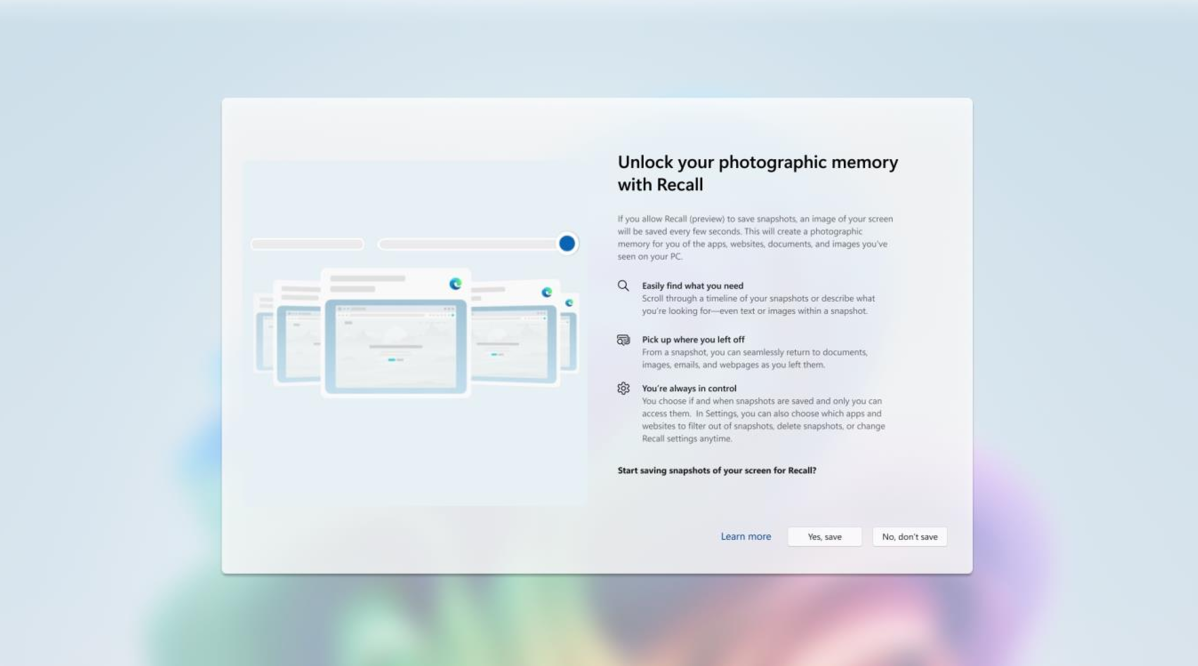

Microsoft’s latest post, authored by vice president of OS and enterprise security David Weston, details how the company intends to protect data within Recall. Though journalists previously found that Recall was “on” by default with an opt-out, it will now be opt-in for everyone. Users will be offered a “clear” choice on whether they want to use Recall, said Weston, and even after opting in, you can later opt out or remove Recall entirely from Windows.

What you’ll see as part of the “out of the box experience” with Windows 11 and Windows Recall.

Microsoft

The post goes into more detail about how data is stored within Windows, which has become one of the focal points of Recall’s controversy. In May, cybersecurity researcher Kevin Beaumont tweeted that Recall stored snapshots in “plain text,” and he published screenshots of what the database looked like within Windows. Beaumont has since deleted his tweet and removed the image of the database from his blog post outlining his findings.

Alex Hagenah then published “TotalRecall,” a tool designed to extract information from the Recall stored files, as reported by Wired. That tool, stored on a GitHub page, still includes screenshots of the Recall database, or at least what was accessible at the time.

Microsoft never publicly confirmed Beaumont’s findings, and doesn’t refer to them in its latest post. The company’s representatives also did not return written requests for further comment.

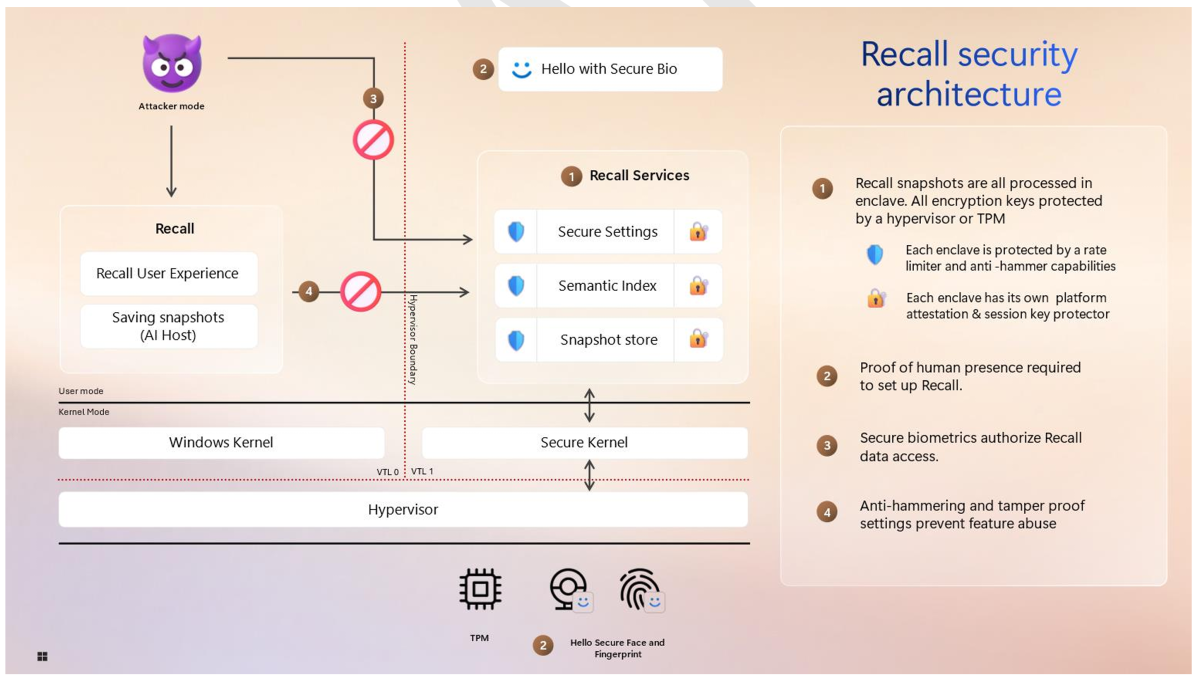

Microsoft now says that Recall data is always encrypted and stored within what it calls the Virtualization-based Security Enclave, or VBS Enclave. That VBS Enclave can only be unlocked via Windows Hello Enhanced Sign-in Security, and the only data that ever leaves the VBS Enclave is whatever the user explicitly asks for. (PIN codes are only accepted after the user has added a Windows Hello key.)

A technical diagram of how Recall stores data, according to Microsoft.

Microsoft

“Biometric credentials must be enrolled to search Recall content. Using VBS Enclaves with Windows Hello Enhanced Sign-in Security allows data to be briefly decrypted while you use the Recall feature to search,” Microsoft said. “Authorization will time out and require the user to authorize access for future sessions. This restricts attempts by latent malware trying to ‘ride along’ with a user authentication to steal data.”
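To make the “time out” idea concrete, here is a minimal Python sketch of a session gate that only allows access for a short window after authorization. It is purely illustrative and is not Microsoft’s implementation: the class name `TimedAuthorization`, the `timeout_seconds` parameter, and the `authorize()` call standing in for a successful Windows Hello check are all assumptions made for the example.

```python
import time


class TimedAuthorization:
    """Hypothetical session gate: access is allowed only briefly after authorization."""

    def __init__(self, timeout_seconds, clock=time.monotonic):
        self.timeout_seconds = timeout_seconds
        self._clock = clock          # injectable clock, useful for testing
        self._authorized_at = None   # None means "never authorized"

    def authorize(self):
        # Stand-in for a successful biometric check (an assumption, not a real API).
        self._authorized_at = self._clock()

    def is_authorized(self):
        # Access is valid only while the elapsed time is under the timeout.
        if self._authorized_at is None:
            return False
        return (self._clock() - self._authorized_at) < self.timeout_seconds
```

Under this sketch, anything that tries to read data after the window closes has to trigger a fresh authorization, which is the behavior Microsoft describes as limiting malware that tries to “ride along” with a user.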

Weston also strove to make it clear that if you use “private browsing,” Recall never captures screenshots. (It’s not clear whether this applies only to Edge or to other browsers as well.) Recall snapshots can be deleted, including by a range of dates. And an icon in the system tray will flash when a screenshot is saved.
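Deleting by a range of dates is conceptually simple. The Python sketch below shows one way such a filter could work on a list of snapshot records. The record layout (a dictionary with a `captured_at` timestamp) and the function name `delete_snapshots_in_range` are assumptions for illustration only; Microsoft has not published how Recall stores or removes snapshots at this level of detail.

```python
from datetime import date, datetime


def delete_snapshots_in_range(snapshots, start, end):
    """Return only the snapshots captured outside the inclusive range [start, end]."""
    return [
        snap for snap in snapshots
        if not (start <= snap["captured_at"].date() <= end)
    ]


# Hypothetical snapshot records, each with a capture timestamp.
snapshots = [
    {"id": 1, "captured_at": datetime(2024, 9, 1, 9, 30)},
    {"id": 2, "captured_at": datetime(2024, 9, 15, 14, 0)},
    {"id": 3, "captured_at": datetime(2024, 9, 30, 18, 45)},
]

# Remove everything captured from September 10 through September 20.
remaining = delete_snapshots_in_range(snapshots, date(2024, 9, 10), date(2024, 9, 20))
```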

What we don’t know is whether Recall will still store deeply personal data, like passwords. “Sensitive content filtering is on by default and *helps reduce* passwords, national ID numbers, and credit card numbers from being stored in Recall,” Microsoft said. (Emphasis mine.)
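Why would a filter only “help reduce” sensitive data rather than eliminate it? A simplified Python sketch shows the problem. It looks for digit sequences shaped like credit card numbers and redacts those that pass the Luhn checksum used by payment cards. This is an assumption-laden illustration, not Microsoft’s filter: the pattern `CARD_CANDIDATE` and the function names are invented for the example.

```python
import re

# Hypothetical pattern: 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:      # double every second digit from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def redact_card_numbers(text: str) -> str:
    """Replace Luhn-valid card-like numbers with a placeholder."""
    def replace(match):
        digits = re.sub(r"\D", "", match.group())
        return "[REDACTED]" if luhn_valid(digits) else match.group()
    return CARD_CANDIDATE.sub(replace, text)
```

A filter like this catches a well-formatted card number, but it would miss one split across two lines, partly obscured, or misread by optical character recognition. Passwords are harder still, since they have no fixed pattern to match.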

Microsoft said it has designed Recall to be part of a “zero trust” environment, where the VBS Enclave can only be unlocked after it’s deemed secure. But trust will be an issue for consumers, too. It appears that Microsoft is at least offering controls to turn off Recall if users are worried about what the feature will track.

Further reading: The various ways Windows 11 collects your personal data and how to opt out