Case study: August 2024 Google core update and a recovery plan

In August, Google rolled out one of its most significant updates of the year. Many SEOs believed it was a partial rollback of the controversial Helpful Content Update – a potential mea culpa from Google.

While some sites have benefited from the changes, others have experienced sharp declines in traffic.

This case study dives into how the update impacted our client’s site, the key metrics affected and the strategies we’re implementing to recover lost visibility and boost SEO performance.

Diagnosing the drop

I recently attended the SEO Office Hours podcast, where a participant asked:

- “We haven’t been affected by the August update, but the indexing bug that happened around the same time has decreased our traffic. How do we solve this?”

My first thought was, what confidence! How sure are you that it was the indexing error if you are looking just at traffic?

The error was resolved in a few days, so why was there no recovery in traffic and rankings?

Yes, we should ignore drops that happened in those two days during the bug, but if they continue afterward, might they be the impact of the core update?

Diagnosing what actually happened is not always easy. We see it all the time.

Sometimes, clients don’t know where to start. Other times, they simply don’t have time to investigate closely enough. The latter was true for our new client.

Their excellent SEO team needed help auditing the website and a sounding board for solving any issues.

After a Google core update rollout is completed, you could examine multiple metrics.

Like everything in SEO, these depend on several factors, such as your key performance indicators (KPIs), the type of website you manage and the countries you target.

To diagnose the impact of the Google core update for our client, we used a simple, three-step approach:

- Understand what has happened sitewide.

- Use the sitewide indicators to segment the data for deeper analysis.

- Deep dive into the most affected pages and sections of the website.

Our client has a large site. Digging into each page would take too much time. We needed to understand what happened overall to segment and prioritize.

For this, we looked at:

- Traffic and conversion trends.

- Overall content health.

- Link profile.

- Technical SEO.

- Wild card: persona-based sitewide signals.

Traffic and conversion trends

Two of the main KPIs for our client were traffic and conversions, which made it clear that our analysis needed to begin in these areas.

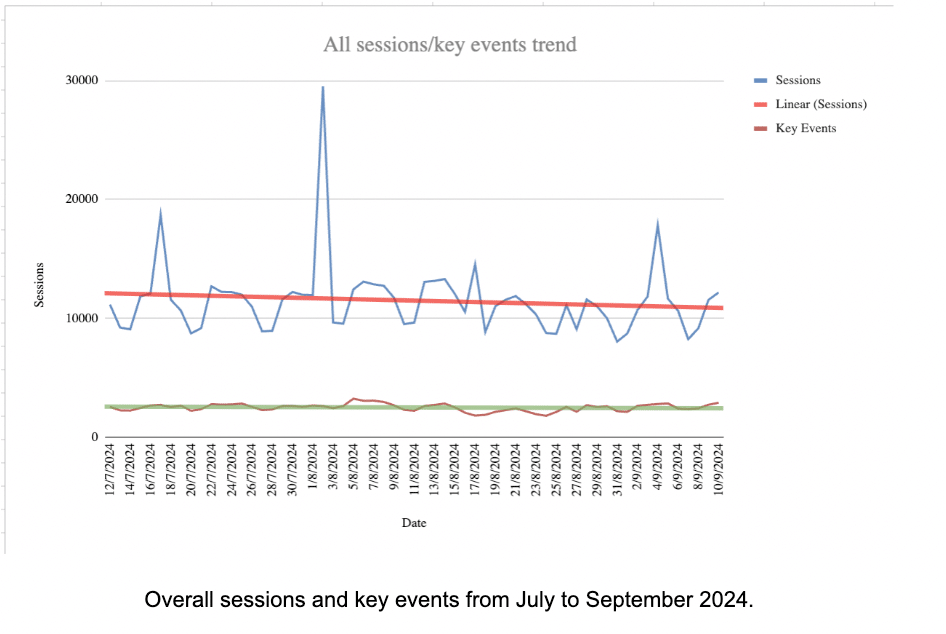

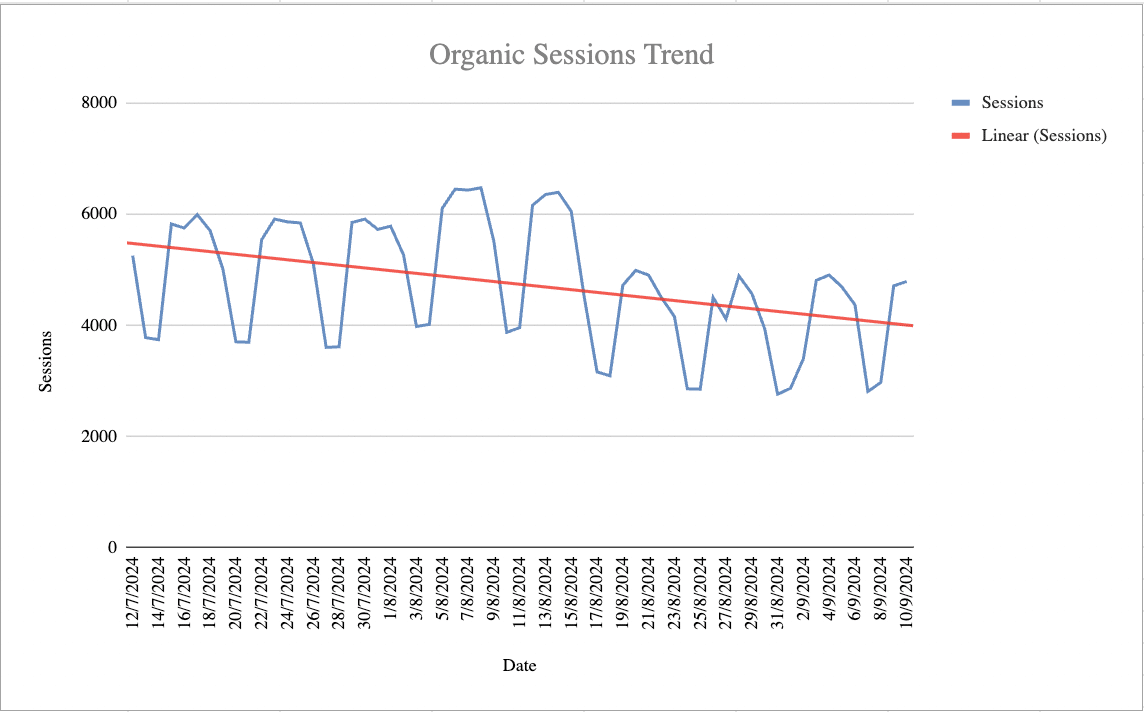

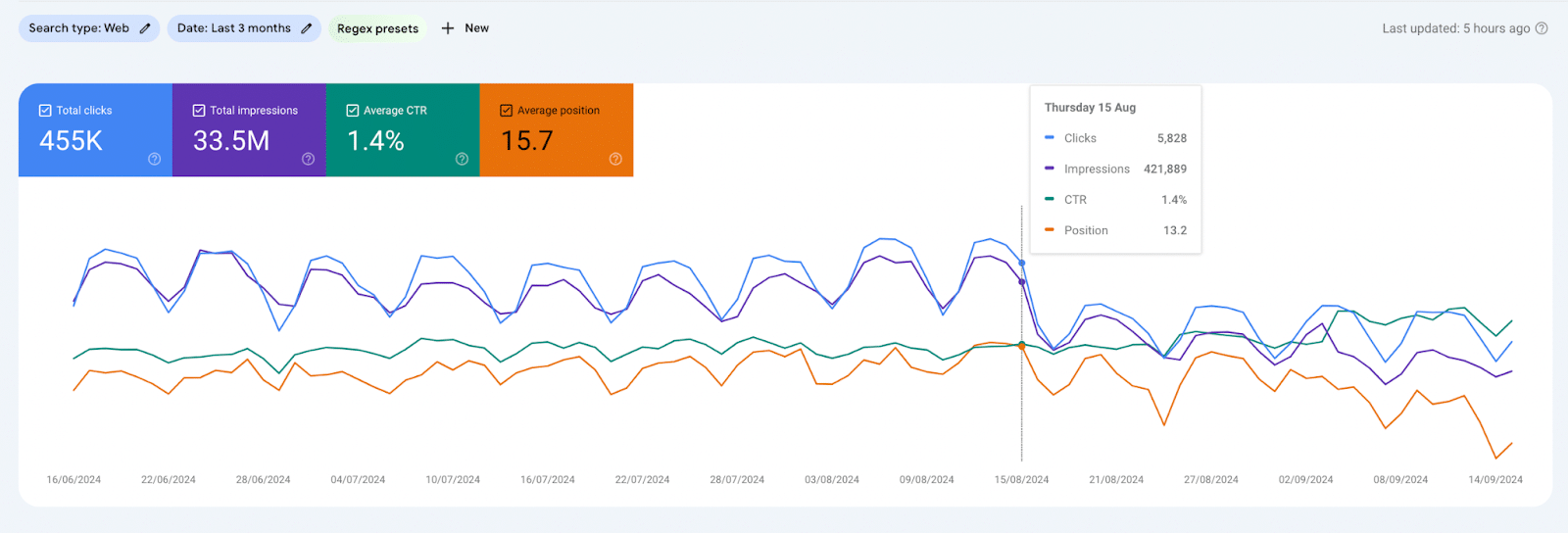

We examined their Google Analytics 4 (GA4) and Google Search Console (GSC) data to assess organic trends and overall traffic patterns.

This holistic approach is crucial because it establishes a baseline for our analysis. For instance, if traffic drops significantly across all channels, the decline is unlikely to be attributable to organic search alone.

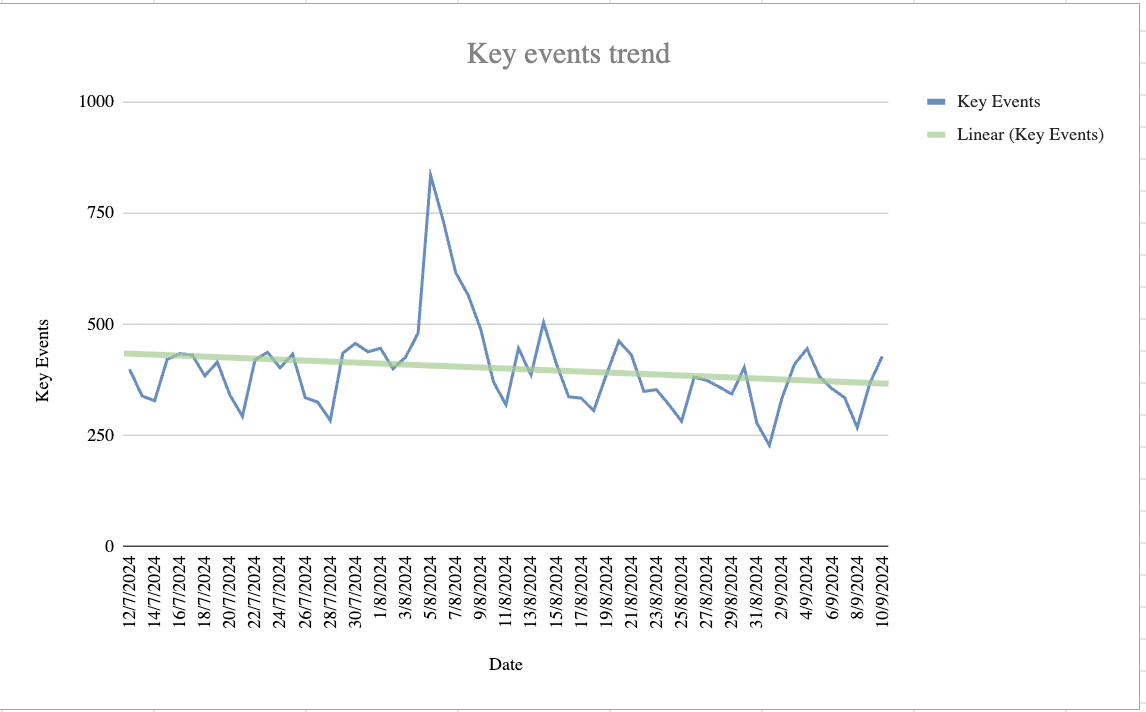

We noticed a slight decrease in sessions overall and no impact on conversions. The decrease was most noticeable in August (the timing of the Google core update), suggesting the update may have played a role and the culprit was likely organic.

The hypothesis was soon confirmed by digging into organic data in both GA4 and GSC.

Organic traffic was down significantly in both GSC and GA4.

Interestingly, just like overall traffic, the organic conversions were much more stable.

It was clear that the issue wasn’t the website’s relevance to its audience; those who visited it were converting. However, the overall number of users hitting the site had significantly decreased.

Before reviewing the rankings, we segmented the data by country to identify where the impact was most pronounced.

This prompted us to ask an essential question: do we care?

In our case, one of the countries most affected was India. While it would have been easy to dive into auditing this market for rankings, we recognized that the devil is in the details.

iOS is not the dominant operating system in India. In 2023, Android had a 95.17% market share, while iOS only had 3.98%.

Since our client’s solution only works with iOS, India is not the right market to focus on. Instead, we should concentrate on the U.S., which is both a larger and a more relevant market for them.
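The country triage above can be sketched in a few lines of Python. This is a minimal illustration, assuming per-country click totals before and after the update have already been exported (for example, from GSC). The figures and the `relevant_markets` set are hypothetical, not the client’s data.

```python
def pct_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after`; negative means a drop."""
    if before == 0:
        return 0.0
    return (after - before) / before * 100


def rank_markets(clicks_by_country, relevant_markets):
    """Return (country, change %) pairs for relevant markets, worst drop first."""
    changes = [
        (country, round(pct_change(before, after), 1))
        for country, (before, after) in clicks_by_country.items()
        if country in relevant_markets  # skip markets the product cannot serve
    ]
    return sorted(changes, key=lambda pair: pair[1])


# Hypothetical (before, after) click totals per country.
clicks = {
    "India": (10_000, 6_000),
    "U.S.": (40_000, 30_000),
    "U.K.": (8_000, 7_200),
}

print(rank_markets(clicks, relevant_markets={"U.S.", "U.K."}))
```

India shows the steepest drop in this made-up data, but it never reaches the ranked list because it is filtered out as a market before any auditing effort is spent on it.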

Overall content health

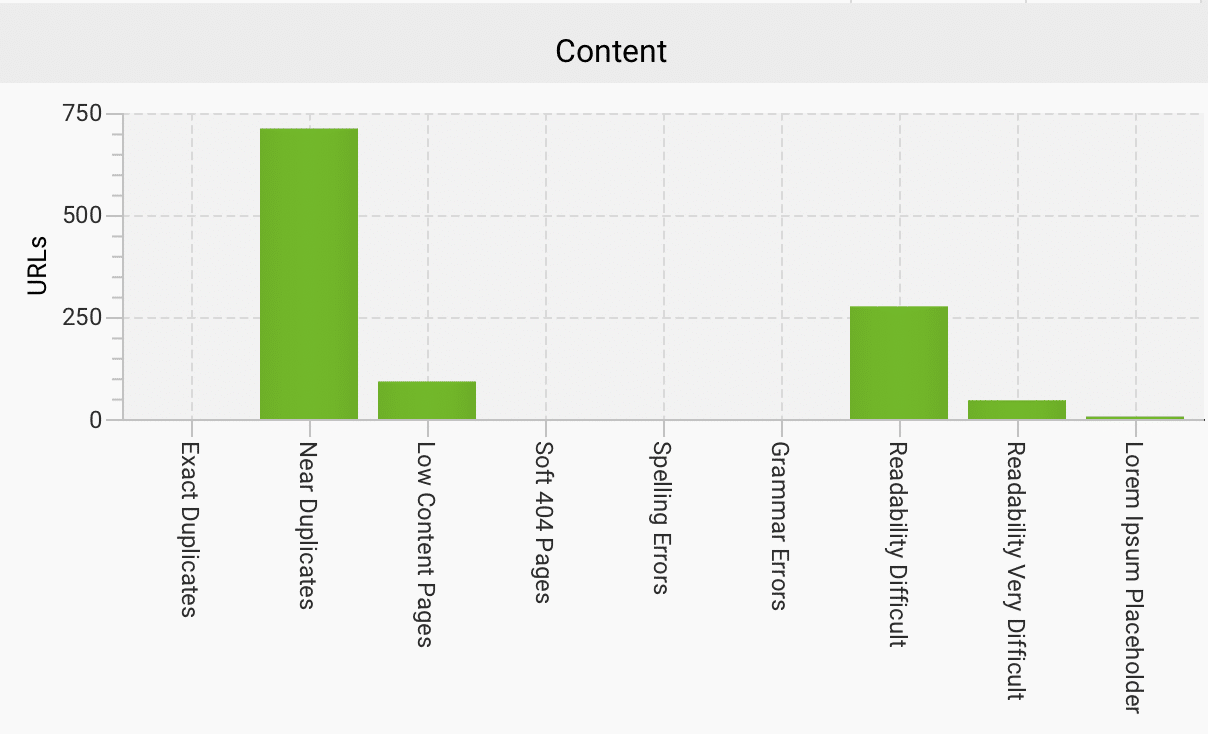

The second sitewide signal we explored was overall content health.

This gave us an initial understanding of possible issues with content at scale. We used Screaming Frog content analysis for this.

To use any tool effectively, it’s crucial to identify what truly matters in the vast amount of data you’re presented with.

In this case, it would be easy to focus on the wrong metric, like readability.

While some pages may have readability challenges, this aligns with our technical audience’s expectations.

The more pressing issue wasn’t readability but the significant number of near duplicates. Most of the affected pages were in the template directory. This was a key insight for segmenting the data based on website structure.
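To make the idea of a near duplicate concrete, here is a simplified sketch that compares pages by the overlap of their three-word sequences (Jaccard similarity on word shingles). It is not how Screaming Frog computes its near-duplicate report; the URLs, page text and the 0.5 threshold are all hypothetical.

```python
from itertools import combinations


def shingles(text: str, size: int = 3) -> set:
    """Break text into overlapping word sequences of length `size`."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Share of shingles two pages have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def near_duplicates(pages: dict, threshold: float = 0.5) -> list:
    """Return (url, url, similarity) for every pair at or above the threshold."""
    sets = {url: shingles(body) for url, body in pages.items()}
    return [
        (u1, u2, round(jaccard(sets[u1], sets[u2]), 2))
        for u1, u2 in combinations(sorted(sets), 2)
        if jaccard(sets[u1], sets[u2]) >= threshold
    ]


# Hypothetical page bodies keyed by URL path.
pages = {
    "/templates/a": "free instagram story template for small business owners",
    "/templates/b": "free instagram story template for small business teams",
    "/blog/x": "how to recover from a google core update",
}

print(near_duplicates(pages))
```

Grouping the flagged pairs by their first path segment is one way to see whether a single directory, such as a template section, accounts for most of them.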

Note: This analysis differs from evaluating what Google defines as helpful content. There are ways to evaluate content at scale for helpfulness, mainly using Google’s NLP API to pick out entities and analyze sentiment, which is something we currently have in the pipeline.

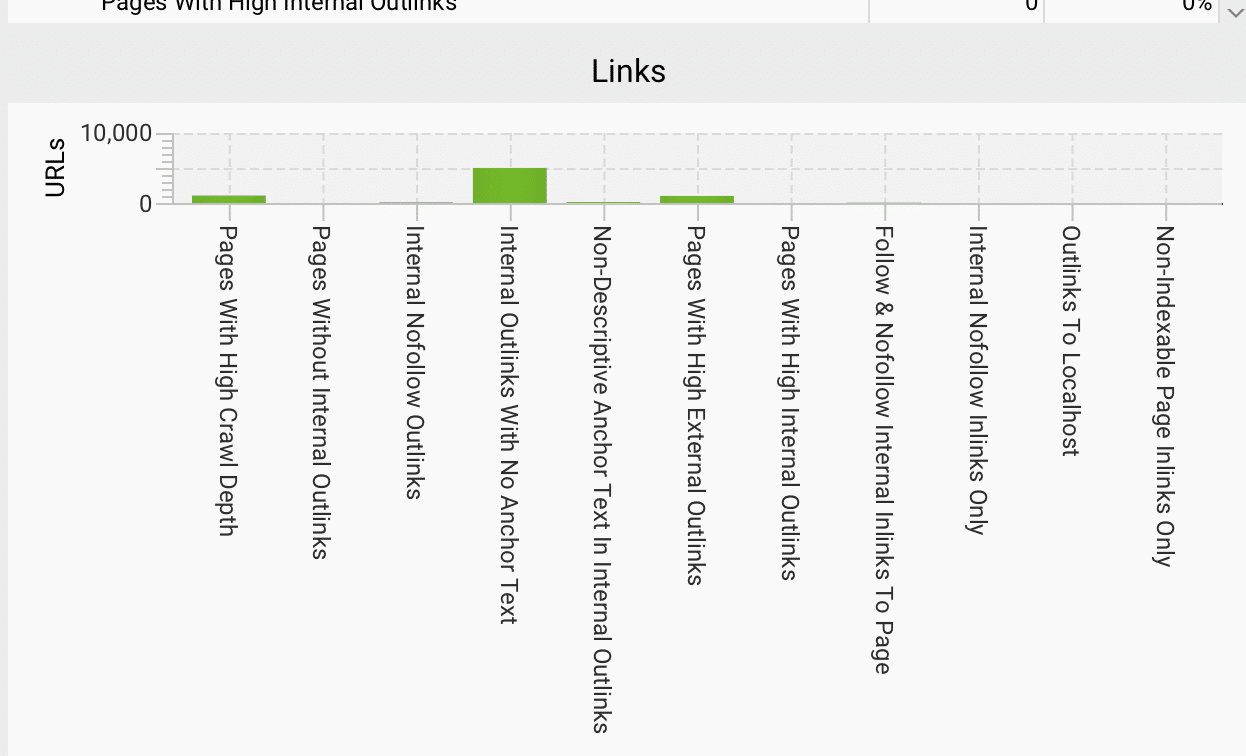

Link profile

The client had already said they wanted to enhance their internal linking at Stage 2 of the project.

For now, our focus was on understanding the situation, which we accomplished by using Screaming Frog.

Overall, the links looked healthy, but the crawl still surfaced a few findings worth acting on.

For example, when we examined the issue of internal outlinks with no anchor text, we discovered it originated from a specific template. While it’s not a top priority, it’s an easy fix at the template level.
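As a rough illustration of what this check looks for, the sketch below scans a page’s HTML for links that have neither visible text nor an image with alt text. It uses only Python’s standard `html.parser`. The sample markup is hypothetical, and a crawler such as Screaming Frog may apply different rules.

```python
from html.parser import HTMLParser


class EmptyAnchorFinder(HTMLParser):
    """Collect hrefs of links with no anchor text and no image alt text."""

    def __init__(self):
        super().__init__()
        self.empty_links = []
        self._href = None      # href of the link currently being read
        self._text = []        # text fragments seen inside that link
        self._has_alt = False  # True if the link wraps an image with alt text

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and "href" in attrs:
            self._href, self._text, self._has_alt = attrs["href"], [], False
        elif tag == "img" and self._href is not None and attrs.get("alt"):
            self._has_alt = True

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            if not "".join(self._text).strip() and not self._has_alt:
                self.empty_links.append(self._href)
            self._href = None


# Hypothetical template output: the first link wraps an image with no alt text.
sample = (
    '<a href="/templates/1"><img src="t.png"></a> '
    '<a href="/blog">Blog</a> '
    '<a href="/pricing"><img src="p.png" alt="Pricing"></a>'
)

finder = EmptyAnchorFinder()
finder.feed(sample)
print(finder.empty_links)
```

When the same pattern shows up on every page built from one template, fixing the template once resolves all of them.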

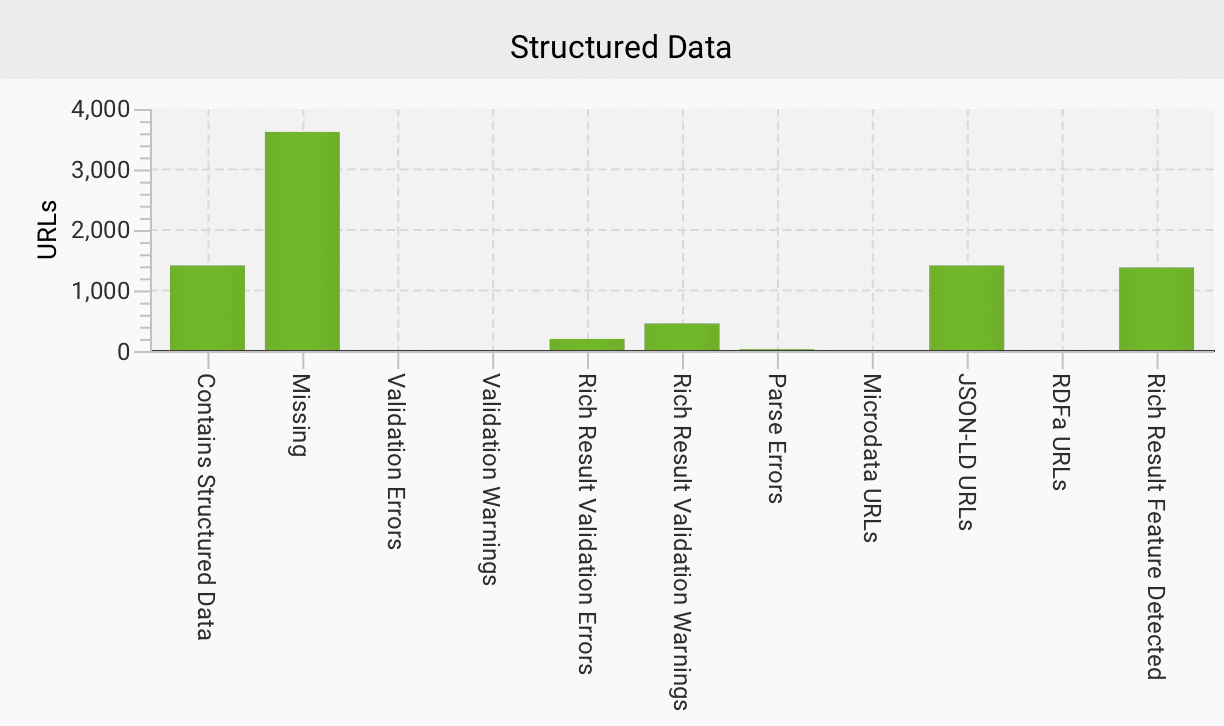

Technical SEO

Not every technical fix will affect rankings, no matter how interesting it may be.

By this point in the analysis, I suspected the real issue was in the content and changes in the SERPs.

I’ve seen many websites struggle after the latest Google updates, and we suspected that Reddit and AI Overviews were taking over the search results.

From what we’ve observed, AIO functions similarly to a featured snippet. Therefore, one area we should focus on is structured data markup.

This Screaming Frog analysis helped us identify missing structured data at the template level and reveal any obvious gaps.
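A similar gap check can be approximated outside the crawler. The sketch below pulls the `@type` values out of JSON-LD script blocks and lists pages that declare none. It is a simplified illustration: it ignores Microdata, RDFa and nested `@graph` structures, and the page samples are hypothetical.

```python
import json
from html.parser import HTMLParser


class JsonLdTypes(HTMLParser):
    """Collect top-level @type values from JSON-LD script blocks."""

    def __init__(self):
        super().__init__()
        self.types = set()
        self._in_jsonld = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld, self._buffer = True, []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag != "script" or not self._in_jsonld:
            return
        self._in_jsonld = False
        try:
            payload = json.loads("".join(self._buffer))
        except json.JSONDecodeError:
            return  # malformed markup counts as no usable structured data
        items = payload if isinstance(payload, list) else [payload]
        for item in items:
            declared = item.get("@type") if isinstance(item, dict) else None
            if isinstance(declared, str):
                self.types.add(declared)
            elif isinstance(declared, list):
                self.types.update(declared)


def schema_types(html: str) -> set:
    parser = JsonLdTypes()
    parser.feed(html)
    return parser.types


# Hypothetical pages: one with Article markup, one with none.
pages = {
    "/blog/post": '<script type="application/ld+json">{"@type": "Article"}</script>',
    "/templates/a": "<p>No markup here</p>",
}

missing = [url for url, html in pages.items() if not schema_types(html)]
print(missing)
```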

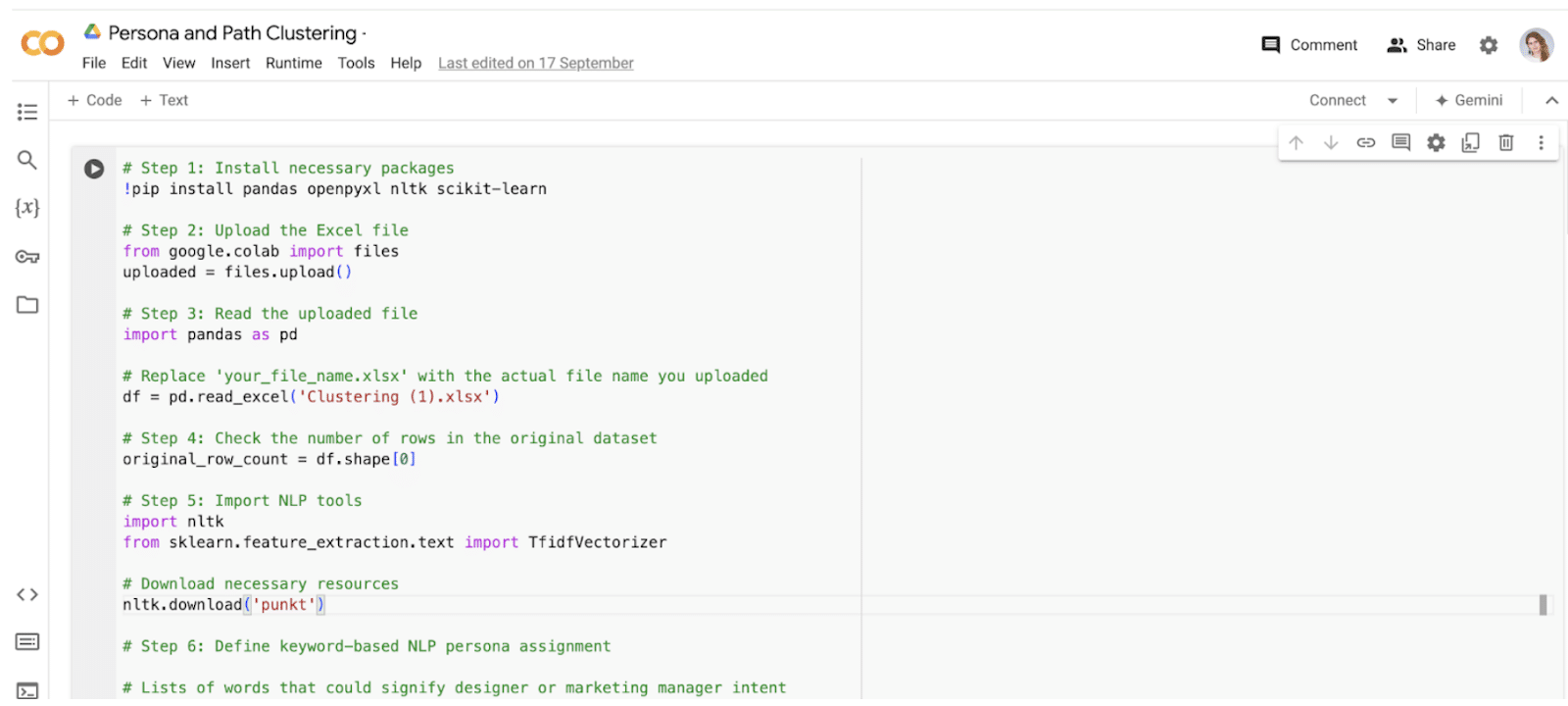

Wild card: Persona-based sitewide signals

Creating well-defined personas can be beneficial for SEO and overall marketing.

The client expressed concern that their recent expansion of personas, as they shifted focus to a different market segment, may have diluted their website’s authority.

While I don’t believe proprietary metrics such as Domain Authority (DA) or Authority Score (AS) are ranking factors, the recent Google API leak did indicate that Google has its own understanding of domain authority.

I can see why a company might think that expanding into a new market could make Google question its target audience.

From a technical perspective, this concern aligns with how semantic SEO works – websites must focus on delivering closely related content.

To determine if our client faced challenges with this new vertical, we used a combination of tools:

- Ahrefs to export the keywords and pages ranking in the U.S.

- A Python script that employed NLP to analyze the pages and assign a persona.

The findings were intriguing: most pages were assigned to both personas, indicating the content was closely aligned.

This suggested that the website didn’t have the problem of straying too far from its core topic.
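The script itself is not reproduced here. As a much simpler stand-in for the idea, the sketch below assigns a page to every persona whose vocabulary it overlaps with. The persona names, term lists and the `min_hits` threshold are all hypothetical, and plain term overlap is a cruder technique than the NLP analysis described above.

```python
import re

# Hypothetical personas and the terms each one cares about.
PERSONA_TERMS = {
    "persona_a": {"developer", "api", "sdk", "integration"},
    "persona_b": {"marketer", "campaign", "analytics", "integration"},
}


def assign_personas(text: str, min_hits: int = 2) -> list:
    """Return every persona whose term list shares at least `min_hits` words with the text."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return sorted(
        persona
        for persona, terms in PERSONA_TERMS.items()
        if len(words & terms) >= min_hits
    )


print(assign_personas("Use the SDK and API integration to feed campaign analytics."))
print(assign_personas("Our API and SDK reference."))
```

A page that lands in both lists, like the first example, is the pattern the findings describe: content that serves both audiences rather than drifting toward one.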

Use the sitewide indicators to segment the data for deeper analysis

So far, everything suggests that the website remains valuable and that the traffic drop is not due to sitewide issues.

- We already identified some challenges linked to specific page sections, such as the template directory.

- We know that most traffic comes from the blog section.

However, with thousands of pages involved, it raises the question: where do you even start?

Using exports from various sources (GA4, GSC and the Ahrefs Top Pages report), we mapped the data to analyze the impact at the keyword level.

This was the labor-intensive part of the process. It involved extensive data wrangling with index-match functions and pivot tables.

Although it was tricky, this approach enabled us to identify priority areas for deeper analysis, including:

- Creating a label based on % drop following the core update

- For pages with a drop in session >= 20% or conversions >=20% – High

- For pages with a drop in session >= 20% or conversions >=20% – Medium

- For pages with a drop in session >= 20% or conversions >=20% – Low/None

- Key event + keyword volume

- Priority based on conversions >10 + volume

While not foolproof, this analysis provided us with an initial list of 42 pages to examine more closely.
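The labeling logic can be expressed as a small function once the exports are joined per page. This is a sketch under stated assumptions: the 20% cutoff for High and the conversions > 10 rule come from the list above, but the Medium cutoff and the `min_volume` value are hypothetical placeholders, because the list shows the same 20% figure for every tier and gives no volume threshold.

```python
HIGH_DROP = 20.0    # from the list above
MEDIUM_DROP = 10.0  # hypothetical placeholder, not from the article


def drop_pct(before: float, after: float) -> float:
    """Percentage drop from `before` to `after`; positive means a decline."""
    return (before - after) / before * 100 if before else 0.0


def impact_label(session_drop: float, conversion_drop: float) -> str:
    """Label a page by the larger of its session and conversion drops."""
    worst = max(session_drop, conversion_drop)
    if worst >= HIGH_DROP:
        return "High"
    if worst >= MEDIUM_DROP:
        return "Medium"
    return "Low/None"


def is_priority(conversions: int, keyword_volume: int, min_volume: int = 500) -> bool:
    """Flag pages with more than 10 conversions and meaningful search volume.

    `min_volume` is a hypothetical cutoff.
    """
    return conversions > 10 and keyword_volume >= min_volume


# Hypothetical page: sessions fell from 1,000 to 700, conversions from 50 to 48.
label = impact_label(drop_pct(1000, 700), drop_pct(50, 48))
print(label, is_priority(conversions=48, keyword_volume=1200))
```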

It’s important to note that all the metrics involved depend on various factors, one of which is the publication date of the posts.

Newer blog posts may not have built up enough authority, and they could have been initially boosted after publishing but later declined due to a lack of user signals, such as click behavior – something we suspect is related to NavBoost.

Conversely, knowing which pages were older helped us identify those that needed refreshing.

By default, Screaming Frog does not extract blog publish dates, so we needed to set up a custom extraction. This involved copying the selector from the date element using a tool like Google Chrome’s Inspect feature.
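For readers who prefer to script this step, a rough Python equivalent of the extraction is sketched below. It assumes the publish date sits in a `<time>` element with an ISO-formatted `datetime` attribute, which is a hypothetical markup pattern. On a real site, the selector copied from the browser’s Inspect panel determines where to look.

```python
from datetime import date
from html.parser import HTMLParser


class PublishDateParser(HTMLParser):
    """Capture the first <time datetime="..."> value on a page."""

    def __init__(self):
        super().__init__()
        self.published = None

    def handle_starttag(self, tag, attrs):
        value = dict(attrs).get("datetime")
        if tag == "time" and value and self.published is None:
            try:
                # Keep only the YYYY-MM-DD part of the timestamp.
                self.published = date.fromisoformat(value[:10])
            except ValueError:
                pass  # not an ISO date; leave as None


def publish_date(html: str):
    parser = PublishDateParser()
    parser.feed(html)
    return parser.published


# Hypothetical blog markup.
sample = '<article><time datetime="2023-05-14T09:00:00Z">May 14, 2023</time></article>'
print(publish_date(sample))
```

With a date per URL, the pages can be split into newer posts that may still be settling and older ones that are candidates for a refresh.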

Once we had the publication dates alongside our list of pages, we could conduct a more in-depth analysis.

Deep dive into the most affected pages and sections of the website

This is where the process becomes manual. We examined the affected pages to identify any patterns, and it quickly became apparent that one existed.

Unfortunately, our suspicions were confirmed: the primary cause of the traffic drop was the change in the SERPs in the U.S. and U.K., driven by the rise of Reddit and the inclusion of AI Overviews.

Reddit emerged as a clear winner from the recent core update, significantly impacting many of the client’s keywords.

Additionally, the expansion of AIO to cover even more queries – particularly in the client’s sector, where research indicates over 20% coverage – has worsened the situation.

AIO tends to impact informational queries more than commercial ones, which explains the loss of visibility in the blog sections.

Like many others, our client is losing valuable traffic due to Reddit and AIO, not because their website is any less helpful. Unfortunately, this is not an easy fix.

Another area we examined closely was the templates directory. Although this section wasn’t driving much traffic or conversions, it was worth investigating for two reasons:

- The client had invested significant time in deploying it.

- The initial analysis indicated issues with its implementation.

Similar to the priority blog pages, we noticed a pattern after reviewing a few template pages.

These pages conflicted with search intent, and their content could be improved.

While search intent is typically categorized into four main groups, I believe in taking a broader and more nuanced approach to understanding it.

Someone searching for a template typically wants to see several options to choose from. They intend to find a few templates, pick one they like and use it.

Google recognizes this. For instance, if I search for “Instagram template” or “Instagram templates,” the top results come from companies offering a collection of options.

Unfortunately, this wasn’t taken into full consideration before the pages were launched.

Rather than optimizing collection pages that speak to the intent, the client optimized the individual template pages.

Additionally, the individual pages had very sparse information for users. That said, adding more detail would make little difference while the pages remain misaligned with search intent.

Fixing the ‘unfixable’

It was clear that our initial recommendations to the client needed a holistic approach, as there was no quick fix for the issues we identified.

To address these challenges, we recommended:

- A few quick wins and tests.

- Developing an improved strategy that considers these shifts.

- Closely measuring AIO and rethinking SEO KPIs.

Everyone seeks the ideal “low effort, high impact” solution. So, before tackling the complex issues, let’s focus on the quick wins.

The analysis revealed some interesting tests we could try, specific areas for improvement and several technical recommendations.

We suggested that the client optimize an initial content piece by reviewing and adjusting it to better align with E-E-A-T guidelines.

I’ve also become increasingly interested in the concept of information gain as a factor in Google’s ranking system.

This makes sense, especially in the age of AI, where new data for training models is highly valuable. We will consider all of these elements when running the test.

The audit clearly showed that the template directory needed a rethink. With a few adjustments, it could become a valuable source of traffic and conversions.

We first suggested revisiting the keyword strategy to better align with user intent, which would set the stage for optimizing those pages.

The audit also identified a few quick wins on the technical side, particularly in Schema markup.

Rather than marking up everything on the site, being strategic about which Schema to implement is key.

For instance, if you’re a SaaS product with excellent reviews, using Review Schema is essential. Our client had several opportunities to use this Schema to enhance their content.
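As an illustration of what such markup can look like, the sketch below builds a JSON-LD snippet for a software product with an aggregate rating, using schema.org’s `SoftwareApplication` and `AggregateRating` types. The product name and rating figures are hypothetical. Search engines may require further properties before showing a rich result, so treat this as a starting point rather than a complete implementation.

```python
import json


def software_review_markup(name: str, rating_value: float, review_count: int) -> dict:
    """Build schema.org markup for a software product with an aggregate rating."""
    return {
        "@context": "https://schema.org",
        "@type": "SoftwareApplication",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }


# Hypothetical product and review figures.
markup = software_review_markup("Example App", 4.7, 312)
snippet = f'<script type="application/ld+json">{json.dumps(markup)}</script>'
print(snippet)
```

The rating values should reflect real, visible reviews on the page; marking up ratings that users cannot see is the kind of blanket markup the strategic approach is meant to avoid.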

Developing an improved strategy that considers these shifts

The client needs to rethink their strategy. We have some initial ideas, though the details aren’t fully clear yet.

One option is focusing on user-generated content (UGC). Reddit thrives on UGC and Google seems to favor it.

However, UGC is not enough for the client to compete with Reddit. It should be part of the strategy, but not the main tactic.

Another option is diversifying the keyword strategy. While expanding into less competitive search terms where Reddit and AI-generated content don’t dominate might help, it’s a short-term fix.

Even though I’m skeptical about AI hype, it’s clear AIO isn’t going away and may actually become more prominent.

The real question is whether this shift is good or bad for users and companies. Ultimately, any strategy must account for how search is evolving.

As AI plays a bigger role in the early stages of the user journey, our client – and others – will need to rethink the kind of content they produce.

Whether AIO guides searchers or they directly use chatbots like ChatGPT, many will only reach your website during the decision stage. This changes how we approach strategy and SEO overall.

While targeting commercial keywords remains important, the focus will shift more toward building a brand and creating diverse content, like video, to rank in video SERPs and on YouTube.

Some of the content that’s lost rankings for our client could perform well in video format.

I don’t see these changes as negative. Relying solely on Google was never a good strategy.

Now, strategies must go beyond SEO and integrate with broader digital marketing efforts. As someone who values full-stack marketers, I believe this shift opens up exciting opportunities.

Closely measuring AIO and rethinking SEO KPIs

Our client already ranks in AIO for some terms, but we can’t measure the impact on CTR since it’s not trackable in GSC or GA4 – and likely never will be.

According to Liz Reid, the Head of Google Search:

- “And we see that the links included in AI Overviews get more clicks than if the page had appeared as a traditional web listing for that query.”

This may or may not be true overall. We know our client was losing clicks and that AIO clicks are, in any case, a different user experience.

Measuring AIO clicks has been a gray area, but it’s crucial to address.

We recommended expanding the client’s SEO tech stack to include platforms that help with tracking.

While they currently use Ahrefs, which doesn’t yet support AIO tracking (though it likely will soon), switching to a tool like Semrush would be a logistical challenge for such a large site. Instead, we suggested supplementing with ZipTie.

However, measurement goes beyond tools – it’s about rethinking KPIs.

While traffic, conversions and revenue remain important, brand KPIs and micro conversions are needed now more than ever.

With search and data privacy changes, tracking every click isn’t feasible.

Building brand resilience and focusing on smaller touchpoints, like newsletter signups and engagement rates, can help fill the gap. As the market evolves, so must our KPIs.